Timeout issues with uWSGI behind an Nginx proxy are very common when setting up an environment for the first time. The setup I was working on ran perfectly fine on my local machine: the page does its work in the background for more than 60 seconds and generates a very large CSV file. All of the uWSGI and Nginx timeouts were set to values far larger than 60 seconds.

The deployment on AWS, however, consistently dropped the connection after 60 seconds: Firefox showed HTTP 504 Gateway Timeout, while the Nginx access log recorded a 499 (client closed request).

With the Nginx error log set to debug level, you see an entry similar to the one below. It is not clear why the client drops the connection, and it happens in every browser.

2016/05/20 08:30:32 [info] 1074#0: *2 epoll_wait() reported that client prematurely closed connection, so upstream connection is closed too while sending request to upstream, client: xxx.xxx.xxx.xxx, server: _, request: "GET /my-page/?some-param=1 HTTP/1.1", upstream: "uwsgi://unix:/app.sock:", host: "example.com", referrer: "http://example.com/report/"

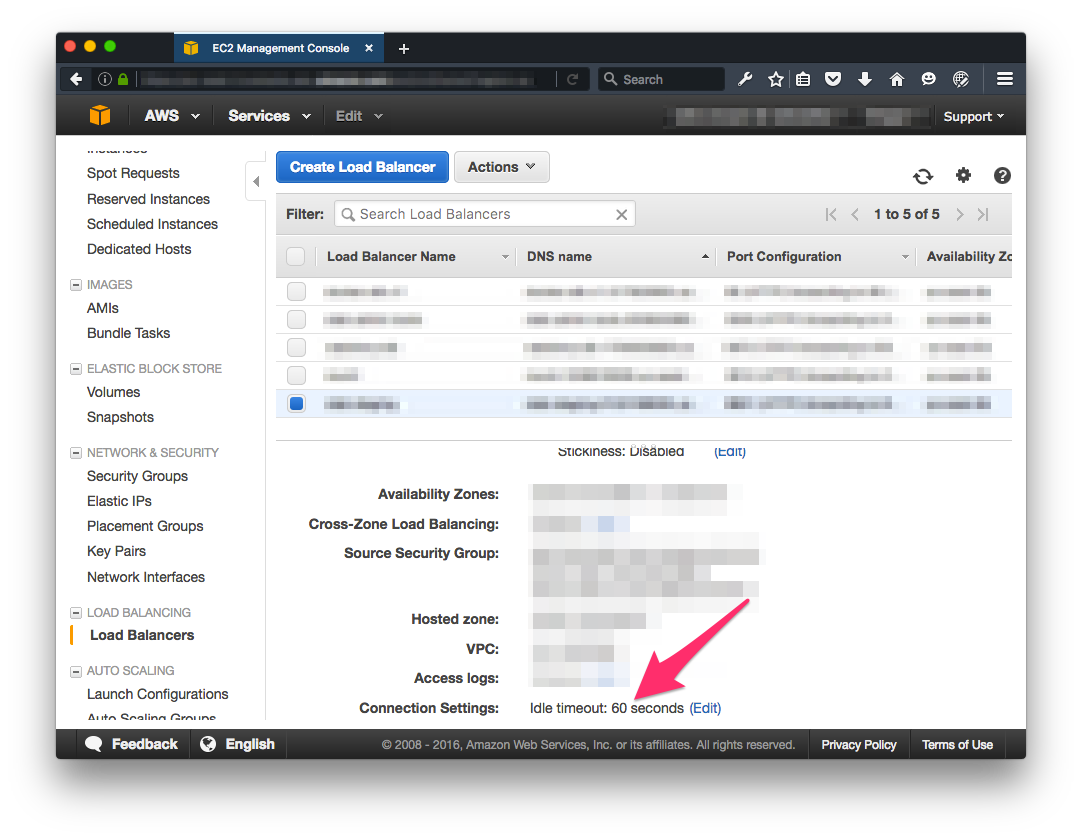

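I found the solution in this answer on Stack Overflow. If your AWS load balancer runs with a mostly default configuration, it drops the connection after 60 seconds, because its idle timeout defaults to 60 seconds. While the page builds the CSV it sends no data, so the load balancer treats the connection as idle and closes it: the browser receives a 504 from the load balancer, and Nginx logs a 499 because its client (the load balancer) disconnected.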

Raise the load balancer's idle timeout to match your Nginx and uWSGI timeouts, and you are done.
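If you prefer to script the change rather than click through the console, here is a minimal Python sketch, assuming a Classic ELB, `boto3` installed, and AWS credentials already configured. The helper names `required_idle_timeout`, `idle_timeout_attributes` and `set_classic_elb_idle_timeout`, the load balancer name, and the timeout values are hypothetical; only the `elb` client's `modify_load_balancer_attributes` call and its `ConnectionSettings`/`IdleTimeout` payload come from boto3.

```python
# Minimal sketch: set a Classic ELB idle timeout so it is at least as long as
# the slowest backend timeout (Nginx / uWSGI). Assumes boto3 is installed and
# AWS credentials are configured. All names and values here are hypothetical.

MAX_IDLE_TIMEOUT = 4000  # AWS upper limit for the ELB idle timeout, in seconds


def required_idle_timeout(*backend_timeouts: int, margin: int = 0) -> int:
    """Return the largest backend timeout plus an optional safety margin."""
    seconds = max(backend_timeouts) + margin
    if not 1 <= seconds <= MAX_IDLE_TIMEOUT:
        raise ValueError(f"idle timeout {seconds}s is outside 1..{MAX_IDLE_TIMEOUT}")
    return seconds


def idle_timeout_attributes(seconds: int) -> dict:
    """Build the LoadBalancerAttributes payload expected by the Classic ELB API."""
    return {"ConnectionSettings": {"IdleTimeout": seconds}}


def set_classic_elb_idle_timeout(load_balancer_name: str, seconds: int) -> dict:
    """Apply the idle timeout to the named Classic ELB and return the API response."""
    import boto3  # imported here so the pure helpers above work without boto3

    client = boto3.client("elb")
    return client.modify_load_balancer_attributes(
        LoadBalancerName=load_balancer_name,
        LoadBalancerAttributes=idle_timeout_attributes(seconds),
    )


if __name__ == "__main__":
    # Hypothetical values: uwsgi_read_timeout 300 in Nginx, harakiri 300 in uWSGI.
    timeout = required_idle_timeout(300, 300)
    print(timeout)  # 300
    # set_classic_elb_idle_timeout("my-load-balancer", timeout)
```

For an Application Load Balancer the equivalent call goes through the `elbv2` client instead, identifying the load balancer by its ARN and setting the attribute key `idle_timeout.timeout_seconds`.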